Model Explanation

Out:

Running DummyClassifier()

accuracy: 0.338 recall_macro: 0.333 precision_macro: 0.113 f1_macro: 0.169

=== new best DummyClassifier() (using recall_macro):

accuracy: 0.338 recall_macro: 0.333 precision_macro: 0.113 f1_macro: 0.169

Running GaussianNB()

accuracy: 0.962 recall_macro: 0.964 precision_macro: 0.967 f1_macro: 0.964

=== new best GaussianNB() (using recall_macro):

accuracy: 0.962 recall_macro: 0.964 precision_macro: 0.967 f1_macro: 0.964

Running MultinomialNB()

accuracy: 0.954 recall_macro: 0.957 precision_macro: 0.959 f1_macro: 0.957

Running DecisionTreeClassifier(class_weight='balanced', max_depth=1)

accuracy: 0.624 recall_macro: 0.650 precision_macro: 0.456 f1_macro: 0.525

Running DecisionTreeClassifier(class_weight='balanced', max_depth=5)

accuracy: 0.872 recall_macro: 0.880 precision_macro: 0.883 f1_macro: 0.871

Running DecisionTreeClassifier(class_weight='balanced', min_impurity_decrease=0.01)

accuracy: 0.917 recall_macro: 0.920 precision_macro: 0.925 f1_macro: 0.918

Running LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)

accuracy: 0.977 recall_macro: 0.979 precision_macro: 0.978 f1_macro: 0.978

=== new best LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000) (using recall_macro):

accuracy: 0.977 recall_macro: 0.979 precision_macro: 0.978 f1_macro: 0.978

Running LogisticRegression(class_weight='balanced', max_iter=1000)

accuracy: 0.970 recall_macro: 0.970 precision_macro: 0.972 f1_macro: 0.970

Best model:

LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)

Best Scores:

accuracy: 0.977 recall_macro: 0.979 precision_macro: 0.978 f1_macro: 0.978
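The DummyClassifier row in the log is a useful reference point: a recall_macro of 0.333 is what a three-class model gets when it always predicts a single class, and its precision_macro (0.113) is then roughly accuracy / 3 (0.338 / 3). The sketch below reproduces that relationship in plain Python. It assumes the baseline always predicts the most frequent class, and the label counts are hypothetical, chosen only for illustration; `macro_scores` is a helper written for this example, not part of dabl or scikit-learn.

```python
from collections import Counter


def macro_scores(y_true, y_pred):
    """Return (accuracy, macro recall, macro precision, macro F1)."""
    labels = sorted(set(y_true))
    recalls, precisions, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        actual = sum(t == label for t in y_true)
        predicted = sum(p == label for p in y_pred)
        recall = tp / actual
        # A class that is never predicted has undefined precision; count it as 0.
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        recalls.append(recall)
        precisions.append(precision)
        f1s.append(f1)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    n = len(labels)
    return accuracy, sum(recalls) / n, sum(precisions) / n, sum(f1s) / n


# Hypothetical label counts (NOT the actual wine training split).
y_true = [0] * 40 + [1] * 50 + [2] * 35

# Assumed baseline behaviour: always predict the most frequent class.
majority = Counter(y_true).most_common(1)[0][0]
y_pred = [majority] * len(y_true)

accuracy, recall_macro, precision_macro, f1_macro = macro_scores(y_true, y_pred)
print(f"accuracy: {accuracy:.3f} recall_macro: {recall_macro:.3f} "
      f"precision_macro: {precision_macro:.3f} f1_macro: {f1_macro:.3f}")
```

Any model worth keeping should clear this floor on recall_macro by a wide margin, which every other model in the log does.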

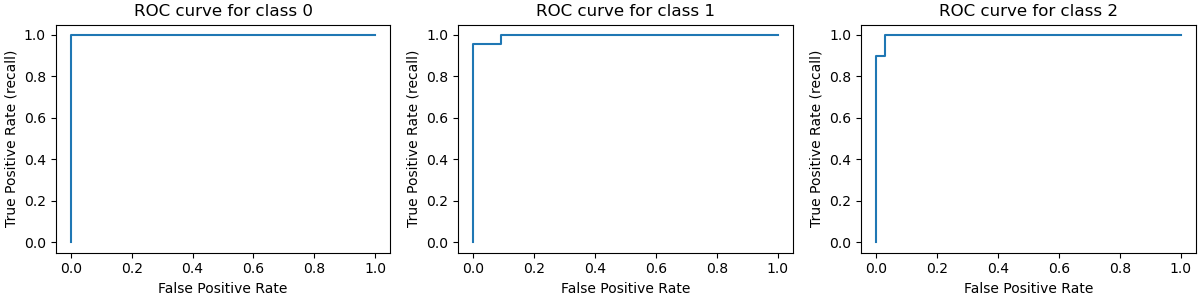

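| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 1.00 | 1.00 | 1.00 | 12 |
| 1 | 1.00 | 0.96 | 0.98 | 23 |
| 2 | 0.91 | 1.00 | 0.95 | 10 |
| accuracy | | | 0.98 | 45 |
| macro avg | 0.97 | 0.99 | 0.98 | 45 |
| weighted avg | 0.98 | 0.98 | 0.98 | 45 |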

[[12 0 0]

[ 0 22 1]

[ 0 0 10]]
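The per-class figures in the classification report can be re-derived from the confusion matrix above. The following is a small sketch in plain Python; it assumes the scikit-learn convention that rows are true classes and columns are predicted classes, which is consistent with the row sums (12, 23, 10) matching the support column.

```python
# Confusion matrix copied from the output above.
# Assumed layout: rows = true class, columns = predicted class.
cm = [
    [12, 0, 0],
    [0, 22, 1],
    [0, 0, 10],
]

n = len(cm)
total = sum(sum(row) for row in cm)

# Recall: correct predictions divided by the row total (all true members).
recall = [cm[i][i] / sum(cm[i]) for i in range(n)]

# Precision: correct predictions divided by the column total (all predictions).
precision = [cm[i][i] / sum(cm[r][i] for r in range(n)) for i in range(n)]

# F1: harmonic mean of precision and recall for each class.
f1 = [2 * p * r / (p + r) for p, r in zip(precision, recall)]

accuracy = sum(cm[i][i] for i in range(n)) / total
macro_precision = sum(precision) / n
macro_recall = sum(recall) / n
macro_f1 = sum(f1) / n

for i in range(n):
    print(f"class {i}: precision={precision[i]:.2f} "
          f"recall={recall[i]:.2f} f1={f1[i]:.2f}")
print(f"accuracy={accuracy:.2f} macro avg: precision={macro_precision:.2f} "
      f"recall={macro_recall:.2f} f1={macro_f1:.2f}")
```

The single off-diagonal entry (one class 1 sample predicted as class 2) accounts for both imperfect numbers in the report: recall for class 1 is 22/23 and precision for class 2 is 10/11.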


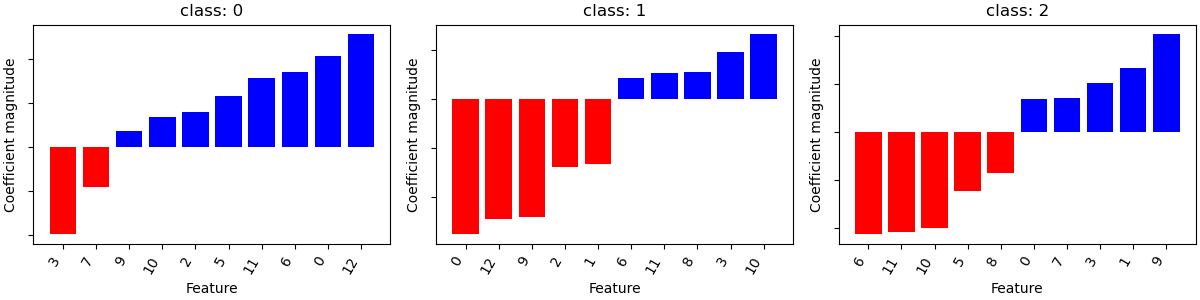

from dabl.models import SimpleClassifier

from dabl.explain import explain

from sklearn.datasets import load_wine

from sklearn.model_selection import train_test_split

wine = load_wine()

X_train, X_test, y_train, y_test = train_test_split(wine.data, wine.target)

sc = SimpleClassifier()

sc.fit(X_train, y_train)

explain(sc, X_test, y_test)
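The log suggests that fitting tries a fixed set of candidate models and keeps the one with the best recall_macro. The sketch below imitates that idea with plain scikit-learn. It is an illustration under stated assumptions, not dabl's actual implementation: the candidate list is a subset taken from the log, and the 5-fold cross-validation, the `StandardScaler` step, and the `random_state` values are choices made for this example.

```python
from sklearn.datasets import load_wine
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

wine = load_wine()
# random_state is fixed here only to make the sketch reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, random_state=0)

# A subset of the models named in the log; the scaling step is an assumption.
candidates = {
    "dummy": DummyClassifier(),
    "gaussian_nb": GaussianNB(),
    "tree_depth_5": DecisionTreeClassifier(
        class_weight="balanced", max_depth=5, random_state=0),
    "logreg_c0.1": make_pipeline(
        StandardScaler(),
        LogisticRegression(C=0.1, class_weight="balanced", max_iter=1000)),
}

# Score every candidate by mean cross-validated macro recall.
scores = {
    name: cross_val_score(
        model, X_train, y_train, cv=5, scoring="recall_macro").mean()
    for name, model in candidates.items()
}

# Keep the candidate with the highest score and refit it on all training data.
best_name = max(scores, key=scores.get)
best_model = candidates[best_name].fit(X_train, y_train)

for name, score in scores.items():
    print(f"{name}: recall_macro={score:.3f}")
print("best:", best_name, "test accuracy:", best_model.score(X_test, y_test))
```

Selecting on recall_macro rather than accuracy gives each class equal weight regardless of its size, which matters here because the three wine classes are not equally frequent.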

Total running time of the script: 0 minutes 0.727 seconds