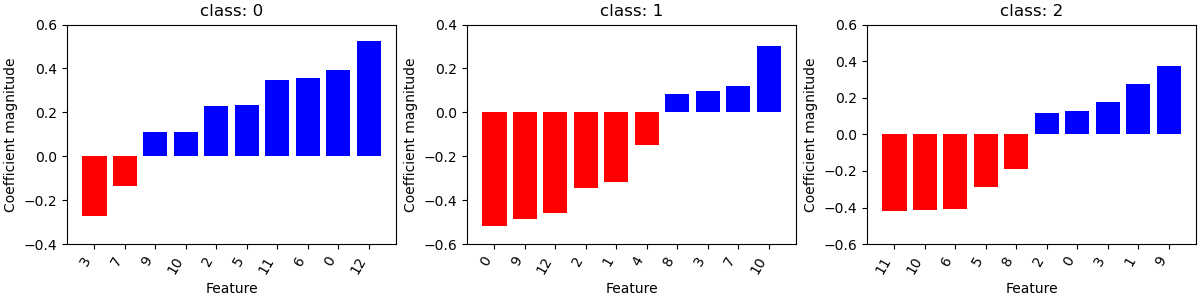

Model Explanation

Running DummyClassifier()

accuracy: 0.383 recall_macro: 0.333 precision_macro: 0.128 f1_macro: 0.185

=== new best DummyClassifier() (using recall_macro):

accuracy: 0.383 recall_macro: 0.333 precision_macro: 0.128 f1_macro: 0.185
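The baseline numbers can be sanity-checked by hand. The sketch below assumes the dummy model always predicts the most frequent class, and that this class makes up about 38.3% of the evaluated samples (read off the reported accuracy). Neither is stated in the output, but both are consistent with it. `constant_baseline_scores` is a hypothetical helper, not part of dabl or scikit-learn.

```python
# Hypothetical helper for illustration; not part of dabl or scikit-learn.
def constant_baseline_scores(majority_fraction, n_classes):
    """Macro-averaged scores for a model that always predicts the majority class."""
    p = majority_fraction
    # The majority class is always recovered (recall 1); all other classes have recall 0.
    recall_macro = 1.0 / n_classes
    # Only the majority class is ever predicted, so its precision equals its share p.
    # Classes that are never predicted are counted with precision 0.
    precision_macro = p / n_classes
    # F1 of the majority class is 2 * p * 1 / (p + 1); the other classes contribute 0.
    f1_macro = (2 * p / (1 + p)) / n_classes
    return {
        "accuracy": p,
        "recall_macro": recall_macro,
        "precision_macro": precision_macro,
        "f1_macro": f1_macro,
    }


# Assumption: 0.383 is the majority-class share, taken from the reported accuracy.
scores = constant_baseline_scores(majority_fraction=0.383, n_classes=3)
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

With three classes, a macro recall of 1/3 is what any constant prediction yields, which is why it serves as the floor the other models are compared against.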

Running GaussianNB()

accuracy: 0.970 recall_macro: 0.973 precision_macro: 0.973 f1_macro: 0.971

=== new best GaussianNB() (using recall_macro):

accuracy: 0.970 recall_macro: 0.973 precision_macro: 0.973 f1_macro: 0.971

Running MultinomialNB()

accuracy: 0.932 recall_macro: 0.936 precision_macro: 0.943 f1_macro: 0.936

Running DecisionTreeClassifier(class_weight='balanced', max_depth=1)

accuracy: 0.571 recall_macro: 0.609 precision_macro: 0.439 f1_macro: 0.486

Running DecisionTreeClassifier(class_weight='balanced', max_depth=5)

accuracy: 0.932 recall_macro: 0.935 precision_macro: 0.936 f1_macro: 0.933

Running DecisionTreeClassifier(class_weight='balanced', min_impurity_decrease=0.01)

accuracy: 0.925 recall_macro: 0.931 precision_macro: 0.928 f1_macro: 0.926

Running LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)

accuracy: 0.993 recall_macro: 0.993 precision_macro: 0.994 f1_macro: 0.993

=== new best LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000) (using recall_macro):

accuracy: 0.993 recall_macro: 0.993 precision_macro: 0.994 f1_macro: 0.993

Running LogisticRegression(C=1, class_weight='balanced', max_iter=1000)

accuracy: 0.993 recall_macro: 0.993 precision_macro: 0.994 f1_macro: 0.993

Best model:

LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)

Best Scores:

accuracy: 0.993 recall_macro: 0.993 precision_macro: 0.994 f1_macro: 0.993
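The "new best" lines in the log are consistent with a running maximum over `recall_macro` in which ties keep the earlier model. The sketch below replays that selection on the rounded scores copied from the log. It is an illustration under that assumption, not dabl's actual implementation, and the unrounded scores may differ beyond the third decimal.

```python
# (model description, recall_macro) pairs copied from the log, in run order.
results = [
    ("DummyClassifier()", 0.333),
    ("GaussianNB()", 0.973),
    ("MultinomialNB()", 0.936),
    ("DecisionTreeClassifier(class_weight='balanced', max_depth=1)", 0.609),
    ("DecisionTreeClassifier(class_weight='balanced', max_depth=5)", 0.935),
    ("DecisionTreeClassifier(class_weight='balanced', min_impurity_decrease=0.01)", 0.931),
    ("LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)", 0.993),
    ("LogisticRegression(C=1, class_weight='balanced', max_iter=1000)", 0.993),
]

best_name, best_score = None, float("-inf")
new_best_log = []  # models that would trigger a "new best" message

for name, score in results:
    # Strict comparison (an assumption): a tie does not replace the current best.
    if score > best_score:
        best_name, best_score = name, score
        new_best_log.append(name)

print("Best model:", best_name)
print("New-best sequence:", new_best_log)
```

Under this assumption the replay reports the same three "new best" models as the log and ends on `LogisticRegression(C=0.1, ...)`, even though the `C=1` variant shows identical rounded scores.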

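| | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.92 | 1.00 | 0.96 | 12 |
| 1 | 0.95 | 0.90 | 0.92 | 20 |
| 2 | 0.92 | 0.92 | 0.92 | 13 |
| accuracy | | | 0.93 | 45 |
| macro avg | 0.93 | 0.94 | 0.94 | 45 |
| weighted avg | 0.93 | 0.93 | 0.93 | 45 |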

[[12  0  0]
 [ 1 18  1]
 [ 0  1 12]]
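The classification report above can be cross-checked against the confusion matrix. The row sums (12, 20, 13) match the support column, so rows are true classes and columns are predicted classes. The following sketch recomputes the per-class and macro-averaged metrics in plain Python from the matrix as printed. The variable names are illustrative only.

```python
# Confusion matrix copied from the output: rows = true class, columns = predicted class.
confusion = [
    [12, 0, 0],
    [1, 18, 1],
    [0, 1, 12],
]

n_classes = len(confusion)
total = sum(sum(row) for row in confusion)

report = {}
for k in range(n_classes):
    true_positives = confusion[k][k]
    support = sum(confusion[k])  # samples whose true class is k
    predicted = sum(confusion[i][k] for i in range(n_classes))  # samples predicted as k
    # Every class here has at least one true and one predicted sample,
    # so no division-by-zero guard is needed for this particular matrix.
    precision = true_positives / predicted
    recall = true_positives / support
    f1 = 2 * precision * recall / (precision + recall)
    report[k] = (precision, recall, f1, support)

accuracy = sum(confusion[k][k] for k in range(n_classes)) / total
# Unweighted mean of precision, recall, and F1 over the classes.
macro = [sum(report[k][i] for k in report) / n_classes for i in range(3)]

for k, (precision, recall, f1, support) in report.items():
    print(f"{k}: precision={precision:.2f} recall={recall:.2f} f1={f1:.2f} support={support}")
print(f"accuracy={accuracy:.2f}")
print(f"macro avg: precision={macro[0]:.2f} recall={macro[1]:.2f} f1={macro[2]:.2f}")
```

Rounded to two decimals, these values agree with the report: for example, class 1 has precision 18/19 and recall 18/20, and overall accuracy is 42/45.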

/home/circleci/project/dabl/explain.py:45: UserWarning: Can't plot roc curve, install sklearn 0.22-dev

warn("Can't plot roc curve, install sklearn 0.22-dev")

from dabl.models import SimpleClassifier

from dabl.explain import explain

from sklearn.datasets import load_wine

from sklearn.model_selection import train_test_split

wine = load_wine()

X_train, X_test, y_train, y_test = train_test_split(wine.data, wine.target)

sc = SimpleClassifier()

sc.fit(X_train, y_train)

explain(sc, X_test, y_test)

Total running time of the script: (0 minutes 0.623 seconds)