
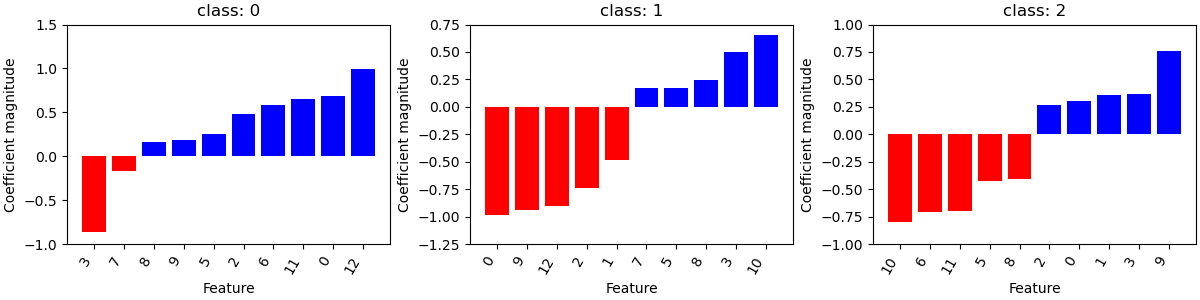

Model Explanation

```text
Running DummyClassifier()
accuracy: 0.376 recall_macro: 0.333 precision_macro: 0.125 f1_macro: 0.182
=== new best DummyClassifier() (using recall_macro):
accuracy: 0.376 recall_macro: 0.333 precision_macro: 0.125 f1_macro: 0.182
```
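The baseline's scores follow from its behavior. The arithmetic below assumes the DummyClassifier always predicts the most frequent class (an assumption; the log does not show its strategy). On this split that is class 1: wine has 71 class-1 samples, 21 of which are in the 45-sample test set, leaving 50 of the 133 training samples, and 50/133 ≈ 0.376 is exactly the logged accuracy. Predicting a single class gives that class recall 1 and the other two recall 0, so recall_macro is 1/3 no matter how the data look.

```python
# Assumption: the dummy always predicts the majority class (class 1).
# Wine has 71 class-1 samples; 21 are in the 45-sample test set, which
# leaves 50 of the 133 training samples.
n_classes = 3
majority_share = 50 / 133  # about 0.376, the logged accuracy

# Recall per class: 1.0 for the majority class, 0.0 for the other two.
recall_macro = (1.0 + 0.0 + 0.0) / n_classes

# Precision per class: the majority class is correct majority_share of the
# time; the other classes are never predicted, so their precision counts as 0.
precision_macro = (majority_share + 0.0 + 0.0) / n_classes

# F1 is the harmonic mean of precision and recall; it is 0 for unpredicted classes.
f1_majority = 2 * majority_share * 1.0 / (majority_share + 1.0)
f1_macro = (f1_majority + 0.0 + 0.0) / n_classes

print(f"accuracy: {majority_share:.3f} recall_macro: {recall_macro:.3f} "
      f"precision_macro: {precision_macro:.3f} f1_macro: {f1_macro:.3f}")
# → accuracy: 0.376 recall_macro: 0.333 precision_macro: 0.125 f1_macro: 0.182
```

This is why recall_macro, the metric used for model selection, gives the dummy a floor of 0.333 rather than rewarding it for the class imbalance that inflates its accuracy.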

```text
Running GaussianNB()
accuracy: 0.970 recall_macro: 0.972 precision_macro: 0.973 f1_macro: 0.971
=== new best GaussianNB() (using recall_macro):
accuracy: 0.970 recall_macro: 0.972 precision_macro: 0.973 f1_macro: 0.971
Running MultinomialNB()
accuracy: 0.963 recall_macro: 0.964 precision_macro: 0.967 f1_macro: 0.965
Running DecisionTreeClassifier(class_weight='balanced', max_depth=1)
accuracy: 0.571 recall_macro: 0.600 precision_macro: 0.417 f1_macro: 0.477
Running DecisionTreeClassifier(class_weight='balanced', max_depth=5)
accuracy: 0.925 recall_macro: 0.923 precision_macro: 0.940 f1_macro: 0.926
Running DecisionTreeClassifier(class_weight='balanced', min_impurity_decrease=0.01)
accuracy: 0.902 recall_macro: 0.902 precision_macro: 0.917 f1_macro: 0.905
Running LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)
accuracy: 0.985 recall_macro: 0.987 precision_macro: 0.987 f1_macro: 0.986
=== new best LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000) (using recall_macro):
accuracy: 0.985 recall_macro: 0.987 precision_macro: 0.987 f1_macro: 0.986
Running LogisticRegression(C=1, class_weight='balanced', max_iter=1000)
accuracy: 1.000 recall_macro: 1.000 precision_macro: 1.000 f1_macro: 1.000
=== new best LogisticRegression(C=1, class_weight='balanced', max_iter=1000) (using recall_macro):
accuracy: 1.000 recall_macro: 1.000 precision_macro: 1.000 f1_macro: 1.000
Best model:
LogisticRegression(C=1, class_weight='balanced', max_iter=1000)
Best Scores:
accuracy: 1.000 recall_macro: 1.000 precision_macro: 1.000 f1_macro: 1.000
```
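Each "=== new best" line marks a candidate whose recall_macro beats every earlier one, which is why MultinomialNB (0.964) and the decision trees never become the best after GaussianNB (0.972). The sketch below replays the logged recall_macro values through a running-maximum rule. It is a hypothetical illustration (`replay_selection` is not a dabl function), it assumes a candidate must strictly improve on the current best (tie handling is not visible in the log), and it fits no models.

```python
# recall_macro values copied from the log, in the order the models were run
runs = [
    ("DummyClassifier()", 0.333),
    ("GaussianNB()", 0.972),
    ("MultinomialNB()", 0.964),
    ("DecisionTreeClassifier(class_weight='balanced', max_depth=1)", 0.600),
    ("DecisionTreeClassifier(class_weight='balanced', max_depth=5)", 0.923),
    ("DecisionTreeClassifier(class_weight='balanced', min_impurity_decrease=0.01)", 0.902),
    ("LogisticRegression(C=0.1, class_weight='balanced', max_iter=1000)", 0.987),
    ("LogisticRegression(C=1, class_weight='balanced', max_iter=1000)", 1.000),
]


def replay_selection(runs):
    """Hypothetical helper: return the models that become the new best, in order.

    Assumes a candidate replaces the current best only on a strict improvement.
    """
    best_score = float("-inf")
    new_bests = []
    for name, score in runs:
        if score > best_score:
            best_score = score
            new_bests.append(name)
    return new_bests


for name in replay_selection(runs):
    print("=== new best", name)
```

The four names it prints are the four "new best" models in the log, ending with `LogisticRegression(C=1, ...)`, the reported best model.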

```text
              precision    recall  f1-score   support

           0       1.00      1.00      1.00        14
           1       1.00      0.95      0.98        21
           2       0.91      1.00      0.95        10

    accuracy                           0.98        45
   macro avg       0.97      0.98      0.98        45
weighted avg       0.98      0.98      0.98        45

[[14  0  0]
 [ 0 20  1]
 [ 0  0 10]]
```
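The classification report can be recomputed from the confusion matrix, whose rows are true classes and columns predicted classes (scikit-learn's convention). For example, class 2 has precision 10/11 ≈ 0.91 because one class-1 sample was predicted as class 2. The pure-Python sketch below (the helper name `per_class_metrics` is hypothetical) reproduces every number in the report. These are test-set figures; the scores logged during fitting can differ (1.000 versus 0.98 accuracy here) because `fit` only ever sees `X_train`.

```python
# Confusion matrix printed by explain(): rows are true classes, columns predictions.
cm = [
    [14, 0, 0],
    [0, 20, 1],
    [0, 0, 10],
]


def per_class_metrics(cm):
    """Return (precision, recall, f1, support) for each class."""
    rows = []
    for k in range(len(cm)):
        tp = cm[k][k]
        support = sum(cm[k])                   # true samples of class k
        predicted = sum(row[k] for row in cm)  # samples predicted as class k
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        rows.append((precision, recall, f1, support))
    return rows


metrics = per_class_metrics(cm)
total = sum(m[3] for m in metrics)
accuracy = sum(cm[k][k] for k in range(len(cm))) / total

# Macro average: plain mean over classes. Weighted: mean weighted by support.
macro = [sum(m[i] for m in metrics) / len(metrics) for i in range(3)]
weighted = [sum(m[i] * m[3] for m in metrics) / total for i in range(3)]

for k, (p, r, f, s) in enumerate(metrics):
    print(f"{k} {p:.2f} {r:.2f} {f:.2f} {s}")
print(f"accuracy {accuracy:.2f} {total}")
print("macro avg", " ".join(f"{v:.2f}" for v in macro))
print("weighted avg", " ".join(f"{v:.2f}" for v in weighted))
```

Note that the macro and weighted averages agree closely here because the one error touches classes of similar size; with a rarer misclassified class, the macro average would drop further.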

```text
/home/circleci/project/dabl/explain.py:45: UserWarning: Can't plot roc curve, install sklearn 0.22-dev
  warn("Can't plot roc curve, install sklearn 0.22-dev")
```

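```python
from dabl.models import SimpleClassifier
from dabl.explain import explain
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split

# Load the wine data and hold out 25% of it (the default) as a test set
wine = load_wine()
X_train, X_test, y_train, y_test = train_test_split(wine.data, wine.target)

# Try a sequence of candidate models and keep the best one
sc = SimpleClassifier()
sc.fit(X_train, y_train)

# Report on the selected model using the held-out test set
explain(sc, X_test, y_test)
```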
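The report and matrix that `explain` printed for this classifier have the layout of scikit-learn's `classification_report` and `confusion_matrix`. For comparison, a minimal plain scikit-learn sketch of the same evaluation is shown below. It assumes the selected `LogisticRegression(C=1, class_weight='balanced', max_iter=1000)` and substitutes `StandardScaler` for dabl's own preprocessing (an assumption; dabl's pipeline is not reproduced). Because the split is seeded differently and the preprocessing differs, its numbers need not match the output above.

```python
from sklearn.datasets import load_wine
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

wine = load_wine()
# random_state is added here (not in the original) so the split is reproducible
X_train, X_test, y_train, y_test = train_test_split(
    wine.data, wine.target, random_state=0
)

# The model dabl selected above; StandardScaler is an assumed stand-in for
# dabl's own preprocessing, which this sketch does not reproduce.
model = make_pipeline(
    StandardScaler(),
    LogisticRegression(C=1, class_weight="balanced", max_iter=1000),
)
model.fit(X_train, y_train)

# Evaluate on the held-out set, as explain() does for the fitted classifier
y_pred = model.predict(X_test)
print(classification_report(y_test, y_pred))
print(confusion_matrix(y_test, y_pred))
```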

Total running time of the script: (0 minutes 0.698 seconds)